Claude + Claude Code Safety in Enterprise: Permission Models, Allowlists and Auditability

Claude Code is not a typical chatbot. It is an agentic coding tool that can read repositories, edit files and run commands. That changes both the risk profile and what good governance looks like.

Security teams often focus on data leaving the organisation. However, developer agents introduce a second risk: actions happen inside your environments. A tool can commit code, modify configs and expose secrets. Therefore, you need a baseline that covers permissions and auditability. You also need a plan that keeps the tool from becoming shadow IT.

This post explains Claude Code enterprise security in plain English. It focuses on permission models, allowlists and evidence you can use in audits. It also shows how Ciralgo can prove whether approved tools replace shadow tools over time.

What Claude Code safety means in enterprise terms

Claude Code is safe enough to scale when all of the following are true.

- Access is controlled with identity and roles.

- Actions are constrained by permissions and allowlists.

- Activity is auditable for incidents and reviews.

- Data handling and retention are documented and enforced.

- Adoption is measured so shadow tools decline.

Why agentic coding tools are different

A coding agent is closer to a junior engineer than a chat window. That is why the control surface is larger.

A wider blast radius than chat

A user can paste a paragraph into a consumer bot. That can leak data. With an agentic tool, a user can also grant tool access. Then the agent can touch these assets.

- Source code repositories

- Local files and build artifacts

- Shell commands and package managers

- Credentials and environment variables

- Internal documentation pulled into context

In practice, many incidents are not sophisticated attacks. They are permission accidents. Someone approves an action too quickly, runs a tool in the wrong directory or adds a third party tool server with broad access.

The security model must cover actions

With agentic tools, the right question is not only what data is sent. You must also ask what the tool can do. That pushes you toward allowlists, sandboxes and least privilege.

The Claude Code permission model

Claude Code is designed to ask before it acts. Still, the quality of your setup matters. Your policies decide how much power the tool gets. Start with the official overview of safeguards in Claude Code Docs: Security. Then translate it into a simple internal model.

Permission prompts and approvals

Claude Code may request permission for actions such as file writes or command execution. Your goal is to reduce risky approvals. You also want predictable behavior.

A practical baseline is this.

- Default to read only access in sensitive repos.

- Require explicit approval for write actions.

- Treat network access as high risk.

- Keep secrets out of the working directory.
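
The baseline above can be sketched as a small decision function. This is a minimal illustration and not Claude Code's own configuration format. The `Action` type, the action kinds and the three outcomes are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    kind: str             # "read", "write", "command" or "network" (assumed kinds)
    sensitive_repo: bool  # True when the repository is classed as sensitive


def baseline_decision(action: Action) -> str:
    """Return "allow", "ask" or "deny" for a requested action."""
    if action.kind == "read":
        return "allow"
    if action.kind == "write":
        # Sensitive repos stay read only; elsewhere a human approves each write.
        return "deny" if action.sensitive_repo else "ask"
    if action.kind in ("command", "network"):
        # Commands and network calls always need explicit approval.
        return "ask"
    return "deny"  # unknown action kinds are refused
```

For example, `baseline_decision(Action("write", sensitive_repo=True))` returns `"deny"`. Keeping secrets out of the working directory is a separate control that this sketch does not cover.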

Allowlisting and deny rules

Allowlisting is the enterprise lever. It flips the default from "anything goes" to "approved only". Claude Code supports managed settings that can allowlist MCP servers and control what users can configure. Use the Claude Code settings and allowlist guidance as a reference point for how these controls work. A simple policy works well:

- Allowlist trusted tools

- Deny risky commands by default

- Allow exceptions per team with review
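
To make the order of evaluation concrete, here is a rough sketch of such a policy. It is not how Claude Code evaluates its own rules. The pattern lists, the `evaluate_command` function and the `team_exceptions` parameter are all hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical patterns for illustration; replace them with your reviewed lists.
DENY_PATTERNS = ("sudo *", "curl *", "wget *", "rm -rf *")
ALLOW_PATTERNS = ("git status", "git diff*", "npm test", "npm run lint")
# Tokens that chain or nest shell commands; glob matching cannot reason about them.
SHELL_CHAINING = (";", "&", "|", "`", "$(", "\n")


def evaluate_command(command: str, team_exceptions: tuple = ()) -> str:
    """Return "allow" or "deny" for a shell command string."""
    cmd = command.strip()
    # Refuse chained commands outright so "git diff; curl ..." cannot slip through.
    if any(token in cmd for token in SHELL_CHAINING):
        return "deny"
    # Deny rules win, so a team exception cannot re-enable a denied command.
    if any(fnmatchcase(cmd, pattern) for pattern in DENY_PATTERNS):
        return "deny"
    if any(fnmatchcase(cmd, pattern) for pattern in ALLOW_PATTERNS + tuple(team_exceptions)):
        return "allow"
    # Anything not explicitly approved is denied by default.
    return "deny"
```

In this sketch a reviewed team exception such as `evaluate_command("make build", team_exceptions=("make build",))` is allowed, while the same command without the exception is denied. Letting deny rules take precedence over exceptions is a design choice here, not a requirement.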

MCP servers are part of your supply chain

Claude Code can connect to external tools via MCP servers. That is powerful. It is also a security boundary. Third party MCP servers can fetch untrusted content, which can introduce prompt injection risk. Therefore, treat MCP servers like software dependencies. Practical steps that reduce risk:

- Only allowlist MCP servers you trust

- Prefer first party or internally hosted servers

- Document the data each server can access

- Review updates like you review packages
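
One way to picture these steps is an internal register that records each approved server, its documented data scope and the version that passed review. The sketch below is an assumption about how you might keep such a register. The server names, versions and the `check_server` helper are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class McpServerEntry:
    hosting: str           # "first_party", "internal" or "third_party"
    data_scope: str        # documented description of the data it can reach
    reviewed_version: str  # last version that passed review


# Hypothetical register; the server names and versions are invented.
REGISTER = {
    "internal-docs": McpServerEntry("internal", "engineering wiki, read only", "1.4.0"),
    "issue-tracker": McpServerEntry("third_party", "issue titles and comments", "2.1.3"),
}


def check_server(name: str, version: str) -> str:
    """Approve a server only if it is registered at the reviewed version."""
    entry = REGISTER.get(name)
    if entry is None:
        return "blocked: not on the allowlist"
    if version != entry.reviewed_version:
        # An update is treated like a package bump and needs a fresh review.
        return "blocked: version has not been reviewed"
    return "approved"
```

Pinning the reviewed version is what turns "review updates like you review packages" into something you can check automatically.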

What can still go wrong

Even with good product design, enterprise rollouts fail in predictable ways.

Overscoped access in the dev environment

A developer runs the tool as an admin. The agent gains broad filesystem access. It can read keys, tokens and local caches. Therefore, a compromised session can do more damage. Fix the baseline:

- Do not run as root

- Use dedicated dev accounts

- Keep secrets in a vault

- Use short lived tokens
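
Part of this baseline can be checked before the tool starts. The following is a minimal sketch of a preflight check, assuming a wrapper script you control. The `preflight` function and the lists of secret-like file names are hypothetical, and the check does not replace a vault or short lived tokens.

```python
import os
from pathlib import Path
from typing import Iterable, List, Optional

# Hypothetical name and suffix lists; extend them for your own stack.
SECRET_NAMES = {".env", "id_rsa", "credentials.json"}
SECRET_SUFFIXES = {".pem", ".key"}


def looks_like_secret(path: Path) -> bool:
    """Flag files whose name or suffix suggests they hold credentials."""
    return path.name in SECRET_NAMES or path.suffix in SECRET_SUFFIXES


def preflight(paths: Iterable[Path], euid: Optional[int] = None) -> List[str]:
    """Return a list of problems; an empty list means the checks passed."""
    problems = []
    if euid is None and hasattr(os, "geteuid"):
        euid = os.geteuid()  # not available on Windows
    if euid == 0:
        problems.append("running as root")
    for path in paths:
        if looks_like_secret(path):
            problems.append(f"secret-like file in working directory: {path}")
    return problems
```

A wrapper could call `preflight(Path.cwd().rglob("*"))` and refuse to launch the agent when the returned list is not empty.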

Prompt injection through tool outputs

Tool outputs can contain untrusted text. That text can manipulate the agent and push it to run unsafe commands. This is more likely when the tool fetches issues, PR comments or web content. Reduce exposure:

- Gate tools that fetch untrusted content

- Require approval for network calls

- Add deny rules for credential access
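
These three controls can be combined into one gate. The sketch below is a simplified model rather than a real defence against prompt injection. The source labels, the credential markers and the `SessionGate` class are assumptions made for this example.

```python
# Hypothetical labels for tools that return content written by outsiders.
UNTRUSTED_SOURCES = {"issues", "pr_comments", "web"}
# Hypothetical substrings that suggest a credential path.
CREDENTIAL_MARKERS = (".env", "id_rsa", ".aws/credentials")


class SessionGate:
    """Tighten approvals once untrusted content has entered the session."""

    def __init__(self) -> None:
        self.saw_untrusted_content = False

    def record_tool_output(self, source: str) -> None:
        if source in UNTRUSTED_SOURCES:
            self.saw_untrusted_content = True

    def decide(self, kind: str, target: str) -> str:
        """Return "deny", "ask" or "defer" (fall back to the normal policy)."""
        if any(marker in target for marker in CREDENTIAL_MARKERS):
            return "deny"  # credential access is never approved here
        if kind == "network":
            return "ask"   # network calls always need approval
        if kind == "command" and self.saw_untrusted_content:
            return "ask"   # commands after untrusted input need a human check
        return "defer"
```

The idea is that a command which would normally pass quietly gets a human check once the session has read an issue, a PR comment or a web page.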

Silent shadow usage

Developers move fast. They install extensions, try new tools and share config snippets. If the approved tool adds too much friction, shadow tools usually win. This is why you need two tracks:

- Make the approved setup easy

- Measure real tool usage over time
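
The second track needs a number you can follow over time. One simple option, sketched here under the assumption that you already collect usage counts per tool, is the share of usage that goes to approved tools. The function names and the tool names in the usage example are hypothetical.

```python
from typing import Dict, List, Set


def approved_share(usage: Dict[str, int], approved: Set[str]) -> float:
    """Fraction of recorded usage that went to approved tools."""
    total = sum(usage.values())
    if total == 0:
        return 0.0
    approved_total = sum(count for tool, count in usage.items() if tool in approved)
    return approved_total / total


def shadow_is_declining(weekly_usage: List[Dict[str, int]], approved: Set[str]) -> bool:
    """True when the approved share in the latest week beats the first week."""
    shares = [approved_share(week, approved) for week in weekly_usage]
    return len(shares) >= 2 and shares[-1] > shares[0]
```

With 30 sessions on an approved tool and 10 on an unlisted extension, `approved_share` returns 0.75. Comparing only the first and last week is a deliberately crude trend test.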

For the broader pattern of shadow usage in Microsoft 365 environments, see Shadow AI Microsoft 365 Copilot. The dynamic is similar even if the tools differ.

Procurement and security review for Claude Code

Security review should focus on evidence. It should also focus on integration into your existing controls. Use this section as your procurement baseline.

Identity and access

Ask for enterprise identity support and admin governance.

- SSO options and enforcement

- Role based access for admins and users

- Controls for who can configure tools

- Controls for who can install MCP servers

Audit logs and incident readiness

Ask what is logged and how you can use it.

- User attribution for activity

- Visibility into permission approvals

- Evidence for changes in settings

- Export options for SIEM workflows when available

If you cannot get logs you can trust, you cannot investigate. That is a hard stop for regulated teams.
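
To show what "logs you can trust" might contain, here is a sketch of a minimal audit record. It is not the log format of any product. The `AuditEvent` fields and both helper functions are assumptions chosen to match the list above.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class AuditEvent:
    timestamp: str   # ISO 8601, UTC
    user: str        # user attribution
    event_type: str  # "permission_decision" or "settings_change"
    detail: str      # what was requested or changed
    decision: str    # "approved", "rejected" or "n/a"


def to_json_line(event: AuditEvent) -> str:
    """Serialise one event as a JSON line, a common shape for SIEM ingestion."""
    return json.dumps(asdict(event), sort_keys=True)


def approvals_per_user(events: Iterable[AuditEvent]) -> Dict[str, int]:
    """Count approved permission requests per user for a periodic review."""
    counts: Dict[str, int] = {}
    for event in events:
        if event.event_type == "permission_decision" and event.decision == "approved":
            counts[event.user] = counts.get(event.user, 0) + 1
    return counts
```

If a vendor log cannot be mapped onto fields like these, attribution or approval visibility is probably missing.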

Retention and data handling

Retention is not only a privacy topic. It is also an incident topic. Ask these questions:

- How long are transcripts kept

- Can admins set retention windows

- Can users delete history

- What happens to deleted data

For general retention context, Anthropic publishes a retention explainer in the Anthropic Privacy Center retention article. Enterprise terms can differ, so validate your tier and contract.

Security posture and certifications

Ask for proof, not slides. Start with the Anthropic Trust Center. Confirm what certifications apply to the product tier you use. Then request the artifacts you need.

- SOC reports if available

- ISO certificates if available

- Pen test posture and scope

- Security contact and incident process

Mini scorecard table

Use this mini scorecard for a fast go or no go decision.

| Control area | Baseline target | What to verify |
|---|---|---|
| Access control | SSO and least privilege roles | Enforcement and admin scope |
| Permission model | Prompts for high risk actions | Approval flows and defaults |
| Allowlists | Approved tools and MCP servers only | Managed settings and policy coverage |
| Auditability | Logs usable for investigations | User attribution and export options |
| Retention | Clear retention policy | Admin controls and deletion behavior |
| Security evidence | Third party assurance | Trust Center artifacts and scope |
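
The scorecard can also be expressed as a simple check. The rule used here, that every control area must be verified before you say go, is an assumption. Your organisation may weight the areas differently. The `go_no_go` function is hypothetical.

```python
from typing import Dict

# The six control areas from the scorecard.
CONTROL_AREAS = (
    "Access control",
    "Permission model",
    "Allowlists",
    "Auditability",
    "Retention",
    "Security evidence",
)


def go_no_go(verified: Dict[str, bool]) -> str:
    """Return "go" only when every control area has been verified."""
    missing = [area for area in CONTROL_AREAS if not verified.get(area, False)]
    if missing:
        return "no go: " + ", ".join(missing)
    return "go"
```

An area that is absent from the input counts as unverified, so a partial review cannot produce a go.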

Checklist for a safe Claude Code rollout

Use this checklist for your pilot and scale plan. Keep it simple. Then iterate.

- Define approved use cases and forbidden data types.

- Enforce SSO and restrict admin roles.

- Require least privilege on dev machines and CI tokens.

- Block running the tool as root in managed environments.

- Set a default policy for permission approvals.

- Start with read only mode in sensitive repos.

- Add allowlists for trusted commands and tools.

- Deny risky commands by default.

- Allowlist MCP servers and document each server scope.

- Prefer internally hosted tool servers where possible.

- Require explicit approval for network access.

- Separate secrets from working directories.

- Define what gets logged and who reviews it weekly.

- Define retention and deletion expectations.

- Train the pilot group with real scenarios.

- Publish a one page safe usage guide.

- Review friction points and remove them quickly.

- Measure shadow tool usage trends and adjust policy.

For a broader cross tool framework, link this checklist to AI Tool Safety Scorecard (2026). For a governed place to standardise prompts and agent patterns, use AI Studio.

How Ciralgo helps when developer tools go shadow fast

Developer tools spread through teams faster than procurement can react. That is normal. It is also why shadow usage persists even after you approve a tool. Vendors such as Anthropic and Microsoft provide the control layer. That includes identity and product settings. However, most organisations still lack visibility across tools.

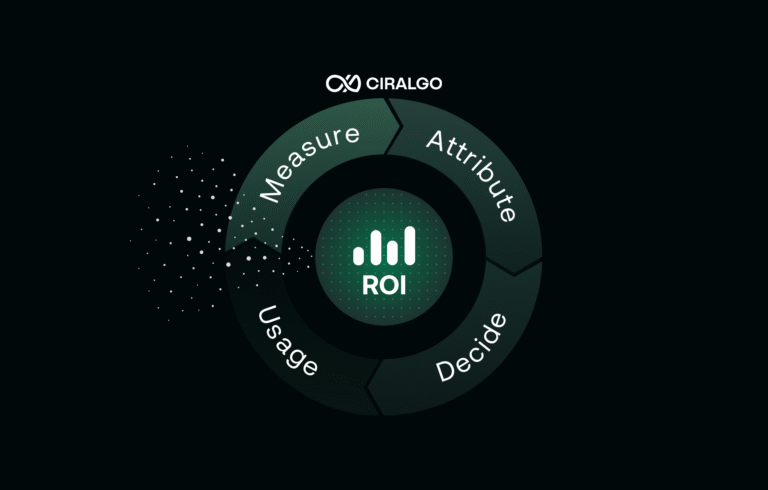

Ciralgo is the AI adoption analytics platform. It shows which AI tools are used across teams. It also highlights cost signals and risk clusters. That matters because you can measure whether Claude Code adoption reduces shadow tools. You can also spot duplicate spend and risky hotspots.

If you want a clear governance story, publish your approach on Security & Trust. Then use adoption analytics to show progress. In parallel, keep your approved tool register aligned with real usage.

Closing

Claude Code can deliver real productivity. It also changes your threat model. With a permission baseline, allowlists and usable logs you can scale safely. Without them, the tool will either get blocked or go shadow.

If you want to review your current developer AI usage and design a safe rollout plan, Book a 20-min call.