From shadow AI to secure copilot: A practical guide for Microsoft 365 Admins

If you are a Microsoft 365 admin, you’re probably stuck between two realities: employees quietly using consumer AI tools with company data, and leadership asking you to make AI safe. The phrase “shadow AI” may already be popping up alongside Microsoft 365 Copilot in risk memos, vendor pitches and board slides.

This guide walks you through a pragmatic path: discover shadow AI, contain it with Microsoft 365, Purview and DLP, and then give employees a tightly governed Copilot environment. At Ciralgo, we often see Microsoft 365 admins trying to connect the dots between policy, Copilot rollout and what people actually do with AI; the steps below follow that journey.

What shadow AI looks like in a Microsoft 365 organisation

“Shadow AI” is the unsanctioned use of AI tools or applications by employees without IT or security approval; in other words, the AI version of shadow IT.

In a Microsoft 365 context, that typically means:

- A sales representative pastes a draft contract into a personal ChatGPT or Claude account to “clean up the language”.

- A finance analyst uploads internal spreadsheets to a random AI add-in they found in the Chrome Web Store.

- A manager uses a personal Gmail account to sign up for an AI meeting assistant that records internal Teams calls.

- Developers use consumer code assistants, copying in proprietary codebases.

Recent surveys show a majority of employees use unapproved AI tools for work, and many admit sharing sensitive company data, including customer data, internal documents and even source code. Gartner now predicts that by 2030, 40% of enterprises will experience security or compliance breaches due to shadow AI. We see the same pattern in many of the organisations we work with; you can find examples in our AI adoption case studies and guides.

Typical shadow AI patterns in Microsoft 365

Inside a Microsoft 365 tenant, shadow AI use usually clusters around:

- Content creation and editing

Users move text out of Word, Outlook, or Teams and paste it into consumer tools to summarise, rewrite, or translate content.

- Data analysis

People export CSVs from Excel or Power BI, then upload them into external AI tools for “quick insights”.

- Meeting and communication helpers

Employees install third-party meeting bots or browser extensions that read Outlook, Teams, or SharePoint content.

- Personal accounts on enterprise devices

Staff sign into AI tools with personal identities (e.g. personal Microsoft, Google, or OpenAI accounts), bypassing your Entra ID controls.

Why shadow AI is a problem

Shadow AI is not just “a bit naughty”. It creates concrete risks:

- Data leakage & loss of control

Sensitive information leaves the Microsoft 365 boundary and ends up in third-party services with unknown storage, access, or training policies.

- Compliance and regulatory exposure

You can’t demonstrate where data went, who accessed it, or whether you had a legal basis for processing. That’s uncomfortable under GDPR and even more so under the EU AI Act for high-risk scenarios.

- No audit trail

Security investigations hit a dead end, because activity happened outside your SIEM, Purview audits and M365 logs.

- Fragmented productivity

Every team picks their own tools and no one knows which workflows actually work well enough to standardise.

Your job as a Microsoft 365 admin is not to eliminate AI usage but to move it from uncontrolled to governed and ensure it stays there.

Microsoft 365 Copilot: Part of the answer, not the whole answer

Microsoft’s own narrative is clear: Copilot should help “bring AI out of the shadows” by giving employees a secure, integrated alternative to consumer AI tools. The good news is that Microsoft 365 Copilot is wired into your existing identity, permissions and compliance stack rather than sitting outside of it.

Shadow AI Microsoft 365 Copilot: what Microsoft gives you out of the box

When you deploy Microsoft 365 Copilot:

- Access is governed by Microsoft Entra ID (formerly Azure AD) and your existing authentication/conditional access controls.

- Data comes via Microsoft Graph, respecting the same SharePoint, OneDrive, Exchange and Teams permissions you’ve already configured.

- Data Loss Prevention (DLP) and sensitivity labels can control which content Copilot can access or surface and even stop Copilot responses when prompts involve highly sensitive data.

Microsoft has even published a deployment blueprint specifically on preventing data leaks to shadow AI using Purview, Defender for Cloud Apps, Entra and Intune, structured around phases such as discovering AI apps and blocking unsanctioned ones. That is a step in the right direction, but technology alone does not solve shadow AI. You still need:

- Policies: clear rules on which AI tools are allowed, tolerated, or prohibited.

- Training: users need to understand why Copilot is safer and how to use it.

- Visibility: telemetry on AI usage inside and outside Microsoft 365, so you can see whether your controls are working.

Step 1 – Discover current shadow AI usage

Before you “stop shadow AI with Microsoft Copilot”, you need to understand what you’re dealing with. A hard ban without insight usually just pushes shadow AI further underground.

Use your existing security and network stack

Practical discovery methods include:

- Proxy / firewall / secure web gateway logs

Look for access to well-known AI domains (chat.openai.com, claude.ai, etc.) and browser extension update URLs. Group by department, device type and identity where possible.

- Microsoft Defender for Cloud Apps / CASB

If you use Defender for Cloud Apps (or another CASB), enable discovery for AI and productivity tools. Many CASBs now have specific categories for generative AI and can flag unsanctioned usage at scale.

- Endpoint management (Intune)

On corporate devices, inventory browser extensions and installed AI clients. Flag those that can read corporate data (e.g. access to tabs, clipboard, file system).
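As a minimal sketch of the proxy-log approach above, the following Python counts requests to AI domains per department. The CSV column names (`user`, `department`, `host`), the sample rows and the helper names are assumptions for illustration; your gateway's export format will differ, and the domain list only contains the two examples named above.

```python
import csv
import io
from collections import Counter

# Illustrative watch list: only the two domains named in the text.
# Extend it with whatever your own discovery turns up.
AI_DOMAINS = {"chat.openai.com", "claude.ai"}


def is_ai_host(host: str) -> bool:
    """Return True if host equals, or is a subdomain of, a watched AI domain."""
    host = host.lower().strip(".")
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def count_ai_hits(log_file) -> Counter:
    """Count AI-domain requests per (department, host) from a CSV log.

    Assumes hypothetical columns: user, department, host.
    """
    hits = Counter()
    for row in csv.DictReader(log_file):
        if is_ai_host(row["host"]):
            hits[(row["department"], row["host"].lower())] += 1
    return hits


# Made-up sample data standing in for a real proxy export.
SAMPLE_LOG = """user,department,host
alice,Sales,chat.openai.com
bob,Finance,claude.ai
alice,Sales,chat.openai.com
carol,HR,intranet.example.com
"""

if __name__ == "__main__":
    for (dept, host), n in count_ai_hits(io.StringIO(SAMPLE_LOG)).most_common():
        print(f"{dept:10} {host:20} {n}")
```

Grouping by department rather than by individual user keeps the first pass focused on patterns instead of people, which fits the non-punitive tone of the discovery step.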

Talk to people

Technical discovery is essential, but shadow AI is also a cultural phenomenon:

- Run quick, anonymous surveys asking what AI tools people use, for which tasks and why Copilot (or other approved options) don’t meet their needs.

- Interview “key users” who are most likely to experiment; they often become your best internal champions later.

- Ask managers where they see the biggest productivity bottlenecks. Shadow AI often clusters around painful workflows.

The goal here isn’t to shame anyone. It’s to build a picture of:

- Which “jobs to be done” people solve with shadow AI.

- Which teams are most exposed.

- Which tools and domains show up frequently.

From there, you can design a more credible alternative than “just don’t”.

Step 2 – Define approved, tolerated and prohibited AI tools

Once you know what’s happening, formalise it.

At minimum, build an internal AI tool register with three categories:

- Approved

- Microsoft 365 Copilot and Copilot Chat.

- Specific enterprise AI SaaS with contracts, DPAs and security reviews.

- Internal bots and agents built on your own infrastructure.

- Tolerated (with limits)

- Low-risk tools used on non-sensitive data only.

- Temporary usage while you work on an approved alternative.

- Tools limited to a sandbox tenant or a specific pilot group.

- Prohibited

- Consumer AI tools with unclear or incompatible data usage terms.

- Any tools requiring upload of personal data, confidential docs, or code without a formal review.

- AI features accessed via personal accounts on corporate devices.
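One way to keep such a register machine-readable is a small lookup structure, sketched below. The entry names other than Microsoft 365 Copilot are placeholders, and treating unknown tools as prohibited until reviewed is an assumption of this sketch rather than a rule stated above.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    APPROVED = "approved"
    TOLERATED = "tolerated"
    PROHIBITED = "prohibited"


@dataclass(frozen=True)
class RegisterEntry:
    tool: str
    status: Status
    conditions: str = ""  # e.g. limits attached to a tolerated tool


# Hypothetical entries; tool names other than Copilot are placeholders.
REGISTER = {
    e.tool.lower(): e
    for e in [
        RegisterEntry("Microsoft 365 Copilot", Status.APPROVED),
        RegisterEntry("Example Translate AI", Status.TOLERATED, "non-sensitive data only"),
        RegisterEntry("Example Consumer Chatbot", Status.PROHIBITED),
    ]
}


def lookup(tool: str) -> RegisterEntry:
    """Return the register entry for a tool (case-insensitive).

    Assumption: tools missing from the register are treated as
    prohibited until someone has reviewed them.
    """
    return REGISTER.get(
        tool.strip().lower(),
        RegisterEntry(tool, Status.PROHIBITED, "not yet reviewed"),
    )
```

For example, `lookup("microsoft 365 copilot").status` yields `Status.APPROVED`, while a tool nobody has registered comes back as prohibited with the condition "not yet reviewed".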

This is where you can naturally introduce the terms shadow AI and Microsoft 365 Copilot side by side in your internal documentation, framing Copilot as the “front door” and shadow AI as the risky side door.

Communicate like you’re trying to win users, not a court case

For adoption and trust, communication matters as much as policy:

- Plain language summaries

Translate policies into a one-pager: “What’s allowed, what’s not and why we prefer Copilot Chat vs shadow AI tools.”

- Concrete examples

Don’t just say “don’t share confidential data externally”. Instead, show examples: customer contracts, employee performance reviews, M&A decks, etc.

- Two-way feedback

Offer a channel where users can request new tools or exceptions. This helps you spot emerging shadow AI patterns early.

Step 3 – Use Microsoft 365, Purview and DLP to contain risky AI usage

With a policy in place, you can start using the Microsoft stack to reduce the risk of shadow AI data leakage from Microsoft 365. Think in layers: identity, device, network and data.

Identity & access (Entra ID and conditional access)

- Require strong authentication and compliant devices for accessing M365 and internal web apps.

- Use conditional access to block or limit access to risky AI apps from corporate identities, especially if they don’t support SSO or enterprise controls.

Device & browser controls (Intune / Edge)

- Manage corporate devices with Intune and enforce browser baselines (e.g. Microsoft Edge with enterprise controls).

- Use Edge for Business and its integration with Microsoft Purview to govern data copied to web forms, including AI prompts.

Network & SaaS discovery (Defender for Cloud Apps / CASB)

Configure Defender for Cloud Apps (or equivalent) to:

- Discover AI tools in use.

- Mark sanctioned vs unsanctioned tools.

- Block or coach users when accessing prohibited AI domains.

Data-level protection (Purview & DLP)

This is where you really stop shadow AI with Microsoft Copilot plus the broader Purview stack:

- Use sensitivity labels on your most important content (e.g. Highly Confidential, Restricted).

- Configure Microsoft Purview Data Loss Prevention (DLP) policies that:

- Prevent copy/paste and upload of highly sensitive data into browser forms for unsanctioned AI domains.

- Inspect prompts sent to sanctioned AI (including Copilot) and block responses when certain sensitive data types are involved.

The aim isn’t to micro-block every action. It is to make the riskiest behaviours, like dumping full customer databases into unknown tools, technically hard and auditable.
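To make the two DLP behaviours above concrete, here is a toy decision function. It is not Purview's API or policy format; the function names, the return values and the choice of a card-number detector (a digit pattern plus the Luhn checksum) as the example "sensitive data type" are all assumptions of this sketch. The two labels are the examples named above.

```python
import re
from typing import Optional

# Labels taken from the examples in the text.
BLOCKING_LABELS = {"Highly Confidential", "Restricted"}

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def contains_card_number(text: str) -> bool:
    """Return True if the text holds a digit run that passes the Luhn check."""
    for match in CARD_RE.finditer(text):
        if luhn_valid(re.sub(r"\D", "", match.group())):
            return True
    return False


def decide(label: Optional[str], sanctioned: bool, text: str) -> str:
    """Hypothetical policy outcome for text headed to an AI tool.

    Unsanctioned destination: block the paste if the content carries a
    blocking label or contains a sensitive data type.
    Sanctioned destination: block the response if the prompt contains a
    sensitive data type. Everything else is allowed.
    """
    has_sensitive_type = contains_card_number(text)
    if not sanctioned:
        if label in BLOCKING_LABELS or has_sensitive_type:
            return "block_paste"
        return "allow"
    return "block_response" if has_sensitive_type else "allow"
```

In a real tenant these rules live in Purview policies rather than in code; the sketch only shows how label, destination and content type combine into one decision.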

Step 4 – Provide a governed Copilot environment as the default alternative

You don’t stop shadow AI simply by saying “no”. As an IT professional, you show colleagues that there is a better and safer alternative. Microsoft 365 Copilot is one of the options, but the rollout needs to be deliberate.

Phase 1: Pilot with the right people

- Start with a small, mixed group: IT, security, legal/compliance and a handful of business power users (e.g. Sales, HR, Operations).

- Limit Copilot to a well-curated data estate: clean SharePoint sites, Teams and OneDrive locations with good permission hygiene.

- Track:

- Which prompts people use most.

- Where Copilot saves the most time.

- Where users still fall back on external AI tools.

Phase 2: Scale by department and use case

As you gain confidence:

- Expand Copilot to more users, focusing on specific workflows (e.g. drafting proposals, summarising meetings, analysing customer feedback).

- Use your AI tool register and training to explain why Copilot Chat, rather than shadow AI tools, is the safer choice for these scenarios:

- Same or better quality of output.

- No need to move data outside M365.

- Activity lives inside your compliance boundary.

Phase 3: Build training and guardrails into onboarding

- Offer short, scenario-based training: “10 safe ways to use Copilot in your role”.

- Create “Do / Don’t” patterns side-by-side (OK: summarise this internal document in Copilot Chat; NOT OK: upload the same document to your personal chatbot).

- Keep reinforcing that the organisation wants people to use AI but in a way that does not create tomorrow’s breach headline.

Throughout these phases, you can keep contrasting shadow AI with Microsoft 365 Copilot in your internal comms: the story becomes “we’re shifting from shadow AI to secure, governed Copilot as our standard”.

Step 5 – Add AI adoption analytics on top

Even with good policies and controls, you still need continuous visibility:

- Are people actually using Copilot?

- Which teams still rely on shadow AI tools?

- Which workflows see real productivity gains?

- Where do we have unexpected risk hotspots?

This is where Ciralgo’s AI adoption analytics platform sits on top of your Microsoft 365 environment. It doesn’t replace Copilot or Purview; it gives you the missing picture of how AI is used across tools and teams.

What an AI adoption analytics platform can do

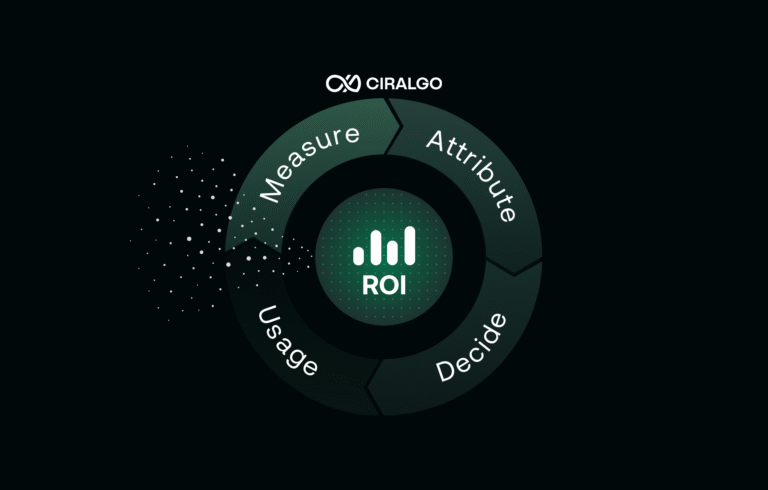

A platform like Ciralgo’s AI adoption analytics can:

- Map AI usage across your toolset

Correlate signals from Microsoft 365 (Copilot, Teams, Outlook, SharePoint) and other applications to see where AI shows up in day-to-day work. - Distinguish first-party vs shadow AI

Show you the split between:- Microsoft 365 Copilot and other approved tools.

- External/shadow tools discovered via network and security telemetry.

- Track the journey from shadow AI to Copilot

Visualise over time:- Shadow AI usage declining as policies and blocks kick in.

- Copilot usage increasing as you roll out licences and training.

- Productivity metrics (e.g. time saved per workflow) improving in parallel.

- Support governance, risk and compliance

Provide a trail of how AI is used across the organisation, which supports the EU AI Act’s emphasis on monitoring, logging and post-market oversight for higher-risk AI use cases.
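The “journey from shadow AI to Copilot” trend in the list above reduces to simple counting once usage events are categorised. The sketch below uses made-up events and hypothetical category names; it is not Ciralgo's data model, only an illustration of the metric.

```python
from collections import defaultdict

# Made-up events: (month, category), where category is "approved" or "shadow".
EVENTS = [
    ("2025-01", "shadow"), ("2025-01", "shadow"), ("2025-01", "approved"),
    ("2025-02", "shadow"), ("2025-02", "approved"), ("2025-02", "approved"),
    ("2025-03", "approved"), ("2025-03", "approved"),
    ("2025-03", "approved"), ("2025-03", "shadow"),
]


def shadow_share_by_month(events):
    """Return {month: fraction of AI events that used shadow tools}, sorted by month."""
    totals = defaultdict(int)
    shadow = defaultdict(int)
    for month, category in events:
        totals[month] += 1
        if category == "shadow":
            shadow[month] += 1
    return {month: shadow[month] / totals[month] for month in sorted(totals)}


if __name__ == "__main__":
    for month, share in shadow_share_by_month(EVENTS).items():
        print(f"{month}: {share:.0%} shadow AI")
```

A falling share is only meaningful if discovery coverage stays constant, so it is worth tracking the total event count next to the ratio.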

Because Ciralgo is EU-first in its data residency approach, hosting on EU cloud infrastructure with strong alignment to GDPR and the EU AI Act, it can help European organisations (and non-EU companies with EU users) adopt AI without losing sleep over where their analytics data lives.

The mindset shift is important: AI governance isn’t a one-off project; it’s an ongoing feedback loop and you need analytics to close that loop.

EU nuance: GDPR, EU AI Act and beyond

If you operate in or touch the EU, you can’t ignore the regulatory angle. A few realities:

- GDPR still applies

Monitoring AI usage often involves processing personal data (e.g. user identities, prompts, documents). You need:

- A clear legal basis (often legitimate interest).

- Data minimisation (collect only what you need).

- Transparency to employees about what’s logged and why.

- EU AI Act raises the bar for certain use cases

The AI Act places extra obligations on high-risk AI systems, including logging, monitoring and human oversight. Most Copilot-style productivity usage won’t be high-risk by default, but:

- If you start using AI for HR screening, credit decisions, or other regulated processes, you might cross that threshold.

- Having solid usage analytics and documentation makes compliance conversations much easier.

- Non-EU organisations are not exempt

If you have EU users, customers, or employees, many GDPR and EU AI Act obligations can still apply in practice.
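The data minimisation point above can be sketched in a few lines: keep only the fields the analysis needs and replace the user identity with a keyed hash. The field names and the 16-character reference length are assumptions for illustration. Note that pseudonymised data still counts as personal data under GDPR, so this reduces exposure but does not remove the need for a legal basis or transparency.

```python
import hashlib
import hmac

# Hypothetical field names; keep only what the usage analysis needs.
KEEP_FIELDS = {"timestamp", "tool", "department"}


def pseudonymise(user_id: str, key: bytes) -> str:
    """Keyed hash (HMAC-SHA256) of the user id, truncated to 16 hex chars."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def minimise(event: dict, key: bytes) -> dict:
    """Drop everything outside KEEP_FIELDS and swap the user for a pseudonym."""
    slim = {k: v for k, v in event.items() if k in KEEP_FIELDS}
    slim["user_ref"] = pseudonymise(event["user"], key)
    return slim


if __name__ == "__main__":
    raw = {
        "timestamp": "2025-01-01T09:00:00Z",
        "user": "alice@example.com",
        "tool": "Copilot",
        "department": "Sales",
        "prompt": "text that should never reach the analytics store",
    }
    print(minimise(raw, key=b"demo-key-rotate-me"))
```

Using a keyed hash instead of a plain hash means someone without the key cannot rebuild the mapping by hashing a list of known email addresses.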

The good news: the same governed Copilot + analytics approach that reduces shadow AI risk also gives you better evidence, logs and documentation for regulators and auditors.

Checklist – From shadow AI to secure Copilot

Here’s a practical checklist you can copy into your internal project plan.

- Assess current shadow AI usage

- Use proxy, firewall, CASB and endpoint data to identify AI domains and tools.

- Run quick surveys and interviews to understand why people use shadow AI and for which workflows.

- Define approved vs unapproved AI tools

- Create an AI tool register: Approved, Tolerated, Prohibited.

- Publish a simple “AI usage policy” in plain language, with clear examples and FAQs.

- Configure Microsoft 365 / Purview / DLP

- Harden Entra ID, conditional access, device compliance and browser policies.

- Use Defender for Cloud Apps (or equivalent) to discover, sanction and block AI tools.

- Implement Purview sensitivity labels and DLP to limit uploads of sensitive data to unsanctioned AI and control what Copilot can access.

- Roll out Copilot as the governed default

- Start with a mixed pilot group and a clean, well-permissioned data estate.

- Scale by department and use case, pairing licences with short, scenario-based training.

- Emphasise why Copilot Chat, rather than shadow AI tools, is safer and more sustainable.

- Add an AI adoption analytics layer

- Deploy an AI adoption analytics platform (e.g. Ciralgo) to map AI usage across Microsoft 365 and beyond.

- Track how shadow AI usage changes as you roll out Copilot and policies.

- Use analytics to demonstrate productivity gains, surface risk hot spots and support GDPR / EU AI Act documentation.

If you want to move from “we think people use Copilot” to “we know exactly how AI shows up in our Microsoft 365 tenant, where shadow AI still appears and where productivity is improving”, Ciralgo can give you that visibility. Pairing Microsoft’s control layer with Ciralgo’s adoption analytics turns AI governance into a measurable, ongoing practice instead of a one-off policy rollout.
