AI Tool Safety Scorecard (2026): What to Check Before You Approve Any AI Assistant

Approving an AI assistant in an enterprise is rarely a “model” decision. It’s a control decision. If your users can paste a customer contract into a consumer chatbot, install a browser extension that reads every tab, or forward meeting transcripts to a random SaaS, your risk is operational. This AI tool safety checklist is designed to help IT and security teams approve tools faster and with fewer blind spots. The scorecard applies to tools like Microsoft 365 Copilot, ChatGPT Enterprise, Claude/Claude Code and Mistral, plus extensions and meeting bots users install without telling you.

The trick: vendor controls matter (SSO, RBAC, audit logs, DLP), but they are not sufficient. “Approved” does not automatically mean “adopted” and it definitely doesn’t mean “safe in practice.” You need both guardrails and visibility into what people actually use.

What “AI tool safety” means in enterprise terms

An enterprise AI tool is “safe” when you can control access, limit data exposure, audit usage, enforce policy and prove it over time, without blocking legitimate productivity.

In practice, that means the tool supports identity and permissions, respects data classification, produces usable logs and fits into your governance and monitoring stack.

Microsoft frames AI security as a lifecycle: discover, protect and govern AI apps and data. That’s the right model, yet most organisations focus only on “protect” and then get surprised by shadow usage.

AI tool safety checklist: the scorecard mindset

A practical scorecard does two jobs:

- Procurement filter: “Is this tool even approvable?”

- Operational readiness: “Can we run this tool safely at scale, with the required evidence?”

A common failure mode is approving tools based on policies and promises (“we don’t train on your data”) but missing operational questions like:

- Can we enforce SSO and block personal accounts?

- Can we produce logs that our SOC can use?

- Can we stop sensitive data from being pasted into prompts or exported in responses?

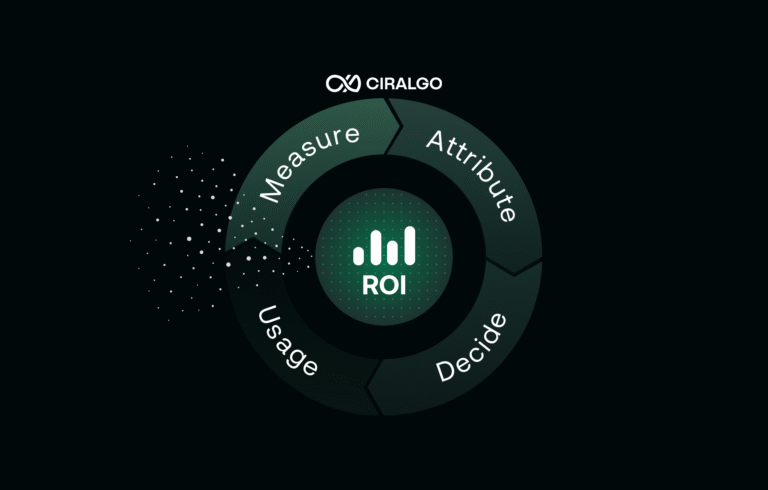

- Can we measure whether people actually moved from shadow AI to the approved tool?

That last point is where platforms like Ciralgo come in: not as a replacement for Microsoft Purview, DLP or vendor controls, but as the visibility and adoption analytics layer that shows real usage across tools, costs, time saved and risk hotspots.

Safety scorecard table

Use this table as your “minimum viable safety specification”. You don’t need every row perfect on day one, but you should be explicit about what’s required, what’s acceptable with limits and what’s a hard no.

| Criteria | What “good” looks like | What to verify | Red flags |
|---|---|---|---|
| SSO | Entra ID / SAML / OIDC, mandatory for all users | Enforce SSO, disable local accounts, SCIM provisioning | Personal accounts allowed, no central auth |
| RBAC | Role-based access (admin, auditor, user, creator) | Fine-grained roles, least privilege, delegated admin | “Everyone is admin” or no roles |
| Audit logs | Searchable logs for prompts, access, admin actions | Log retention options, export/API, user attribution | No logs, or logs without user identity |
| DLP support | Integrates with DLP / classification controls | Control uploads/copy/paste; policy by label/type | “We’ll add DLP later” |
| Data residency | Clear region selection + contractual clarity | Where data is stored/processed, subprocessors | “Global” with no details |
| Retention controls | Admin-configurable retention and deletion | Default retention, legal hold support, eDiscovery fit | Retention unclear; user-only deletion |
| Model training policy | Clear enterprise policy (no training on customer data) | Contract + product docs alignment | Vague language, opt-out unclear |
| SIEM export | Logs usable by SOC (API/export formats) | Integration with SIEM/SOAR | “Screenshots only” reporting |
| Admin analytics | Adoption & usage reporting by team/role | Active users, feature usage, anomalies | No analytics; only billing totals |
| Integrations | Controlled integrations with M365, Jira, etc. | Permission scopes, app consent, least privilege | Broad scopes, uncontrolled connectors |
| Browser/endpoint controls | Plays well with MDM, browser policies | Block extensions, restrict unmanaged devices | Requires unmanaged browser plugins |
| Vendor certifications | Security posture evidence | ISO 27001/SOC2, pen tests, trust docs | No trust center; “trust us” security |
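
One way to make “required”, “acceptable with limits” and “hard no” explicit is to record each row of the scorecard as data and sort the findings mechanically. The sketch below is only an illustration: the `Criterion` and `Finding` names, the choice of which rows are required, and the rule that an unverified row counts as a red flag are all assumptions, not something the scorecard prescribes.

```python
from dataclasses import dataclass
from enum import Enum


class Finding(Enum):
    GOOD = "good"          # matches the "What good looks like" column
    PARTIAL = "partial"    # verified, but with gaps
    RED_FLAG = "red_flag"  # matches the "Red flags" column


@dataclass(frozen=True)
class Criterion:
    name: str
    required: bool  # True: any gap blocks approval; False: a gap is acceptable with limits


def evaluate(criteria: list[Criterion], findings: dict[str, Finding]) -> dict[str, list[str]]:
    """Sort criteria into hard blockers, gaps to document, and passes."""
    report: dict[str, list[str]] = {"hard_no": [], "acceptable_with_limits": [], "ok": []}
    for criterion in criteria:
        # Assumption: a row nobody verified is treated as a red flag, not as a pass.
        finding = findings.get(criterion.name, Finding.RED_FLAG)
        if finding is Finding.GOOD:
            report["ok"].append(criterion.name)
        elif finding is Finding.RED_FLAG or criterion.required:
            report["hard_no"].append(criterion.name)
        else:
            report["acceptable_with_limits"].append(criterion.name)
    return report


# Hypothetical review of one tool against four of the rows above.
criteria = [
    Criterion("SSO", required=True),
    Criterion("Audit logs", required=True),
    Criterion("Admin analytics", required=False),
    Criterion("Data residency", required=False),
]
findings = {
    "SSO": Finding.GOOD,
    "Audit logs": Finding.PARTIAL,
    "Admin analytics": Finding.PARTIAL,
    # "Data residency" was never verified, so it counts as a red flag.
}
report = evaluate(criteria, findings)
```

Here `report["hard_no"]` lists the rows that block approval, and `report["acceptable_with_limits"]` lists the gaps you would write down together with the compensating limits.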

Approval decision matrix

| Category | What it means | Examples | Typical controls |
|---|---|---|---|
| Approved | Can be used with business data under policy | Microsoft 365 Copilot, ChatGPT Enterprise or approved internal assistants | SSO mandatory, DLP policies, logging, training, support model |
| Tolerated | Limited use, low-risk data only, temporary | Niche AI tool for public content and sandbox pilots | No sensitive data, limited users, time-boxed, review cadence |
| Prohibited | Not allowed for work data or enterprise devices | Consumer AI accounts, unknown browser extensions, tools without logs | Block domains/plugins, enforce conditional access, coaching messages |
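
The matrix can also be written down as a small rule set, so that two reviewers looking at the same facts reach the same category. This is a rough sketch rather than a recommended policy: the `ToolReview` fields, the order of the rules and the tool names are hypothetical, and only the control lists are taken from the matrix.

```python
from dataclasses import dataclass

# Typical controls per category, copied from the matrix.
TYPICAL_CONTROLS = {
    "Approved": ["SSO mandatory", "DLP policies", "logging", "training", "support model"],
    "Tolerated": ["no sensitive data", "limited users", "time-boxed", "review cadence"],
    "Prohibited": ["block domains/plugins", "enforce conditional access", "coaching messages"],
}


@dataclass(frozen=True)
class ToolReview:
    name: str
    enforces_sso: bool
    has_audit_logs: bool
    is_consumer_account: bool
    handles_sensitive_data: bool


def categorise(review: ToolReview) -> str:
    # Consumer accounts and tools without logs appear as Prohibited examples.
    if review.is_consumer_account or not review.has_audit_logs:
        return "Prohibited"
    # Assumption: enforced SSO plus logs is the minimum for handling business data.
    if review.enforces_sso:
        return "Approved"
    # Logs but no enforced SSO: tolerable only while the data stays low-risk.
    if not review.handles_sensitive_data:
        return "Tolerated"
    return "Prohibited"


# Hypothetical tools, named for illustration only.
internal = ToolReview("internal-assistant", True, True, False, True)
niche = ToolReview("niche-writer", False, True, False, False)
extension = ToolReview("unknown-extension", False, False, False, True)

decisions = {t.name: categorise(t) for t in (internal, niche, extension)}
```

Looking up `TYPICAL_CONTROLS[decisions["niche-writer"]]` then gives the controls to attach to that decision, which keeps the category and its conditions together in one record.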

The practical workflow: approve faster without banning everything

Here’s a pragmatic flow that works in real enterprises:

Step 1: Start with “what data could leave?”

Before you review vendors, list your top leakable assets:

- Customer contracts and pricing

- HR/people data (performance, health, recruitment)

- Source code and architecture docs

- Incident reports, vulnerabilities, security controls

- M&A, board decks, strategy documents

Then define the policy line in plain language:

- “Never paste X into any AI tool”

- “Only approved tools can process Y”

- “Sensitive labels require Z protections”
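
The three policy lines above translate naturally into a lookup that a reviewer, or a script, can apply. The following is a minimal sketch under stated assumptions: the data class names, the `LOW_RISK` rule for public content and the conservative default for unlisted classes are all hypothetical and would come from your own classification scheme.

```python
from enum import Enum


class Rule(Enum):
    NEVER = "never paste into any AI tool"          # the "X" line
    APPROVED_ONLY = "only approved tools"           # the "Y" line
    PROTECTED = "approved tools plus protections"   # the "Z" line
    LOW_RISK = "approved or tolerated tools"        # assumption: public content


# Hypothetical mapping from data class to policy line.
POLICY = {
    "hr_health_data": Rule.NEVER,
    "customer_contract": Rule.APPROVED_ONLY,
    "source_code": Rule.PROTECTED,
    "public_content": Rule.LOW_RISK,
}


def is_allowed(data_class: str, tool_category: str, protections_on: bool = False) -> bool:
    """Return True if this data class may be processed by a tool in this category."""
    # Assumption: unlisted data classes fall back to the approved-only rule.
    rule = POLICY.get(data_class, Rule.APPROVED_ONLY)
    if rule is Rule.NEVER or tool_category == "Prohibited":
        return False
    if rule is Rule.LOW_RISK:
        return tool_category in ("Approved", "Tolerated")
    if rule is Rule.PROTECTED:
        return tool_category == "Approved" and protections_on
    return tool_category == "Approved"
```

For example, `is_allowed("source_code", "Approved")` is false until `protections_on=True` is passed, which mirrors the “sensitive labels require Z protections” line.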

Step 2: Decide your control layer

Your control layer is typically a combination of:

- Identity and access (SSO, conditional access)

- Data protection (classification, DLP)

- Endpoint/browser governance (MDM, managed browsers, extension control)

- Monitoring (logs into SIEM, anomaly detection)

This is where vendor-native controls (and Microsoft security guidance) matter, but only if you use them consistently.

Step 3: Decide your visibility layer

Even with perfect controls, reality leaks through:

- Users try prohibited tools

- Teams adopt tolerated tools faster than approved ones

- Productivity gains show up in specific workflows, not everywhere

Ciralgo’s AI adoption analytics platform is designed for this gap: it maps AI usage across tools and teams, ties usage to cost and time saved and highlights where risks cluster, so you can prove governance is working (and fix it when it isn’t).

Why approved tools still fail: shadow AI + lack of measurement

Most “AI tool safety” programs fail for one non-technical reason: they assume policy changes behaviour.

What happens instead:

- The approved tool is slower or less convenient than the consumer one.

- Users don’t understand what is allowed, so they guess.

- Teams build “unofficial” workflows (prompts, templates, meeting bots) outside governance.

- Leadership sees licensing dashboards and thinks adoption is fine, while the real work happens elsewhere.

This is why your governance program needs two parallel tracks:

- Make the safe path the easiest path (approved tools that work for real workflows)

- Measure the real path (what’s actually used, where and with what outcome)
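
To make “measure the real path” concrete, one simple signal is the share of observed AI usage that lands on approved tools, tracked per period. The sketch below assumes you already have usage events as `(period, tool)` pairs from some source such as a proxy log or an analytics export; the event shape and the tool names are hypothetical.

```python
from collections import Counter


def approved_share(events: list[tuple[str, str]], approved: set[str]) -> dict[str, float]:
    """Return, per period, the fraction of AI usage events that hit approved tools."""
    total: Counter = Counter()
    on_approved: Counter = Counter()
    for period, tool in events:
        total[period] += 1
        if tool in approved:
            on_approved[period] += 1
    return {period: on_approved[period] / total[period] for period in sorted(total)}


# Hypothetical events: (month, tool name).
events = [
    ("2026-01", "consumer-chatbot"),
    ("2026-01", "consumer-chatbot"),
    ("2026-01", "approved-assistant"),
    ("2026-01", "meeting-bot"),
    ("2026-02", "approved-assistant"),
    ("2026-02", "approved-assistant"),
    ("2026-02", "approved-assistant"),
    ("2026-02", "consumer-chatbot"),
]
shares = approved_share(events, approved={"approved-assistant"})
```

A share that rises from one period to the next suggests usage is moving onto the governed path; a flat share alongside healthy licence numbers suggests the real work is still happening elsewhere.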

If you’re already dealing with shadow usage in Microsoft 365 environments, start with our “Shadow AI Microsoft 365 Copilot” guide and then come back to this scorecard to standardise decisions across all tools.

EU and GDPR considerations without turning into a legal project

If you operate in the EU (or have EU employees/customers), your AI tool safety approach benefits from being GDPR-aware.

Key points to keep it practical:

- Logging and monitoring can involve personal data (user IDs, prompts, activity patterns).

- You’ll want clear purpose limitation, minimisation, retention discipline and transparency to employees.

- Your policies and controls should produce an audit trail you can explain without sounding like surveillance.
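
Minimisation and retention discipline can be applied to usage logs before they reach a dashboard. The sketch below shows one possible approach, not a compliance recipe: it replaces the user ID with a keyed hash, drops fields such as the prompt text, and checks each record against a retention window. The record fields, the key handling and the retention period are assumptions. Keyed hashing is pseudonymisation, not anonymisation, so the output is still personal data.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone


def pseudonymise(user_id: str, key: bytes) -> str:
    """Replace a user ID with a stable keyed hash (HMAC-SHA256, truncated)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def minimise(record: dict, key: bytes) -> dict:
    """Keep only the fields needed for adoption reporting; the prompt text is dropped."""
    return {
        "user": pseudonymise(record["user_id"], key),
        "tool": record["tool"],
        "timestamp": record["timestamp"],
    }


def within_retention(record: dict, now: datetime, retention_days: int) -> bool:
    """True if the record is still inside the retention window."""
    recorded_at = datetime.fromisoformat(record["timestamp"])
    return now - recorded_at <= timedelta(days=retention_days)


# Hypothetical raw record; in practice the key would come from a secrets store.
raw = {
    "user_id": "alice@example.com",
    "tool": "approved-assistant",
    "timestamp": "2026-01-10T09:00:00+00:00",
    "prompt": "Summarise this contract ...",
}
slim = minimise(raw, key=b"demo-key")
now = datetime(2026, 3, 1, tzinfo=timezone.utc)
keep = within_retention(slim, now, retention_days=90)
```

Because the hash is stable for a given key, per-user trends still work in reports, while whoever holds the key controls re-identification.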

This is not legal advice, but it is a reminder that governance evidence matters as much as the technical controls. The European Commission’s data protection overview is a useful starting point for GDPR context. The EU AI Act is also now a formal regulatory framework (Regulation (EU) 2024/1689). Even if your use case isn’t “high-risk,” the direction of travel is clear: more documentation, more accountability, more evidence.

Standardise agents and prompts, not just tools

Tool approval is only half the story. The next bottleneck is: what do people build on top of the tools?

If you don’t standardise:

- Prompt libraries drift into Slack threads and personal notes

- Teams reinvent the same workflows with different assistants

- “Pilot agents” become permanent shadow agents

- You pay twice: duplicate tools + duplicate effort

A practical governance action is to create a single source where approved prompts, agent definitions and workflow patterns live: reviewed, versioned and reusable. That makes your safe environment feel productive, not restrictive.
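
As a rough illustration of what “reviewed, versioned and reusable” could mean in practice, here is a minimal in-memory registry. The `PromptRegistry` and `PromptVersion` names are hypothetical, and a real setup would more likely sit in a Git repository or an internal catalogue than in a Python object.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PromptVersion:
    version: int
    text: str
    reviewed_by: str


@dataclass
class PromptRegistry:
    _entries: dict[str, list[PromptVersion]] = field(default_factory=dict)

    def publish(self, name: str, text: str, reviewed_by: str) -> PromptVersion:
        """Add a new version; nothing is published without a named reviewer."""
        if not reviewed_by:
            raise ValueError("a prompt needs a named reviewer before it is published")
        history = self._entries.setdefault(name, [])
        entry = PromptVersion(len(history) + 1, text, reviewed_by)
        history.append(entry)
        return entry

    def latest(self, name: str) -> PromptVersion:
        """Return the newest approved version of a prompt."""
        return self._entries[name][-1]

    def history(self, name: str) -> list[PromptVersion]:
        """Return all versions, oldest first, as an audit trail."""
        return list(self._entries[name])


registry = PromptRegistry()
registry.publish("contract-summary", "Summarise the key obligations.", reviewed_by="legal-ops")
registry.publish("contract-summary", "Summarise obligations and renewal dates.", reviewed_by="legal-ops")
current = registry.latest("contract-summary")
```

Older versions stay in `history`, so a team can see what changed and who reviewed it instead of hunting through chat threads.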

The AI tool safety checklist

Use this as your minimum bar for approval (adjust to your risk profile).

- SSO is mandatory and personal accounts are blocked/disabled.

- RBAC exists with least-privilege roles and delegated admin.

- Audit logs include user identity and admin actions.

- Log retention is configurable and supports your investigation timelines.

- Export/API exists for logs into your SIEM/SOAR.

- DLP / classification controls can restrict sensitive data flows (upload/copy/paste, connectors).

- Data residency is explicit (storage + processing), with clear contractual commitments.

- Subprocessors are disclosed and reviewable.

- Model training policy is unambiguous for enterprise data.

- Encryption at rest and in transit is documented.

- Admin analytics exist: active users, usage patterns, anomalies.

- Integrations use least privilege (scoped permissions, consent governance).

- Endpoint/browser requirements are compatible with your MDM and managed browser controls.

- Content sharing controls exist (disable public links, external sharing boundaries).

- Incident response process exists (how vendor reports, timelines, contacts).

- Security evidence exists (SOC2/ISO, trust center, pen test posture).

- Rollout plan exists (pilot → scale) with training and clear “do/don’t” examples.

- Measurement plan exists: how you’ll track adoption, workflow impact and shadow AI displacement.
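
For the audit log and SIEM export items in particular, a quick test during a pilot is whether an exported record can be turned into an attributable event at all. The sketch below assumes a vendor export with `time`, `actor` and `event` fields; those field names are hypothetical, so map them to whatever the vendor’s API actually returns.

```python
import json

REQUIRED_FIELDS = ("timestamp", "user", "action", "tool")


def to_siem_line(raw: dict, tool: str) -> str:
    """Normalise one vendor log record into a single JSON line for SIEM ingestion."""
    event = {
        "timestamp": raw.get("time"),   # hypothetical vendor field names
        "user": raw.get("actor"),
        "action": raw.get("event"),
        "tool": tool,
    }
    missing = [name for name in REQUIRED_FIELDS if not event[name]]
    if missing:
        # A record without user identity or timestamp fails the checklist.
        raise ValueError(f"log record lacks {missing}; it cannot be attributed")
    return json.dumps(event, sort_keys=True)


line = to_siem_line(
    {"time": "2026-02-01T10:00:00+00:00", "actor": "alice@example.com", "event": "prompt_submitted"},
    tool="approved-assistant",
)
```

If a sample of real exports raises `ValueError` here, the tool’s logs lack user identity or timestamps, which is the red flag the scorecard describes.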

10 procurement and security questions to ask every AI vendor

- Can we enforce SSO for every user and disable local logins?

- What RBAC roles exist and can we restrict who creates agents/workflows?

- What exactly is logged (prompts, outputs, admin actions) and for how long?

- Can logs be exported via API and in what format?

- Where is data stored and processed and can we select region?

- Do you train any models on our data by default? If not, where is that guaranteed?

- What retention controls exist (default retention, deletion, legal hold support)?

- Which integrations/connectors exist and what permission scopes do they require?

- What security certifications and audit reports can you provide (SOC2/ISO)?

- What happens during a security incident: how are we notified, and how fast?

Related reading

If you’re building your governance program around Microsoft 365, start here:

- Shadow AI Microsoft 365 Copilot (how to redirect usage into a governed path)

- From pilot to profit (how to connect adoption signals to P&L impact)

Closing

The goal isn’t perfect safety. It’s reducing uncertainty and making governance tangible. A good scorecard helps you approve tools faster. A good control layer reduces leakage. But to stay ahead of shadow AI, you need ongoing visibility so you can prove the safe path is the easiest path and adjust when reality shifts.

If you want to see how AI usage actually looks across your organisation, across Microsoft 365 and beyond, Ciralgo can help you move from “approved in theory” to “governed and adopted in practice.”

Disclaimer: This article is for informational purposes only and does not constitute legal advice.