ChatGPT Enterprise Safety Checklist: Retention, Residency and Admin Analytics

If you are rolling out ChatGPT to an enterprise workforce, you need more than a license. You need a ChatGPT Enterprise security checklist that your security team can sign off on, and a rollout that changes daily behavior. Otherwise shadow tools stay popular and your risk stays the same.

This guide is practical. It covers the controls that decide whether ChatGPT Enterprise is safe to scale, shows where teams still go off script, and ends with a checklist you can run in a week.

What ChatGPT Enterprise safety means in enterprise terms

You are in a good place when you can do four things.

- Control who can access the workspace and what they can do

- Control what data can be entered and how long it stays

- Investigate issues with usable logs and admin reporting

- Prove that shadow AI usage is going down over time

Vendor controls help but they do not guarantee adoption. They also do not guarantee that users stop using personal accounts.

Consumer ChatGPT vs Business vs Enterprise

This distinction is not marketing detail. It changes risk.

- Consumer ChatGPT is personal use. People can still use it for work. They often do. It sits outside your central controls.

- ChatGPT Business and ChatGPT Enterprise are workspace products. They are designed for admin control and compliance commitments. OpenAI describes enterprise privacy commitments for these tiers on its Enterprise privacy page.

The real goal is not to pick the perfect tier. Your goal is to make the governed path the easiest path.

The admin controls that matter

You can group enterprise safety into six control areas. These are the areas that procurement and security should review first.

1) Identity and access controls

Start with how people enter the product.

- Single sign on should be mandatory

- Role based access should be clear

- Admin roles should be limited

- Workspace creation should be controlled

If you cannot enforce workspace access then you cannot enforce anything else. You also end up with duplicate workspaces and inconsistent settings.

2) Retention controls

Retention is the most misunderstood control. In practice retention answers two questions.

- How long are conversations and files kept

- Who can delete them and when

OpenAI states that workspace admins control how long data is retained for ChatGPT Enterprise and ChatGPT Edu. It also notes that deleted conversations are removed from its systems within 30 days unless legal obligations apply.

Your checklist should include a retention decision that fits your risk profile. Short retention reduces exposure. It can also reduce user utility if people rely on history.

3) Data residency

Residency is often a procurement gate. Decide what you need to know about each of the following.

- Storage region for conversation content and files

- Processing region expectations

- Subprocessor transparency

OpenAI publishes business data privacy and compliance material that includes data residency options for business customers. Use the OpenAI business data page as your starting point.

If you are EU based then residency is also a trust signal. It does not replace governance. It reduces cross border friction.

4) Data use and model training policy

Ask a direct question: is your customer content used for training by default? OpenAI describes enterprise commitments on its Enterprise privacy page. Do not stop at the headline. Validate it in contracts and product docs. Make sure your internal policy matches what the vendor offers.

5) Encryption and key management

Encryption is a baseline requirement, but you should still verify it. OpenAI states it uses industry standard cryptography for business data. It describes AES-256 at rest and TLS 1.2 or higher in transit on its business data page. If your environment requires customer managed keys then validate that path. Also validate how it affects integrations.
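
For integrations you build yourself, one way to hold your own side of the connection to the same "TLS 1.2 or higher" bar is to set a minimum protocol version on the client. The sketch below uses only Python's standard `ssl` module. The function name `strict_tls_context` is an illustrative choice, and the sketch says nothing about how the vendor configures its servers.

```python
import ssl

def strict_tls_context() -> ssl.SSLContext:
    """Build a client context that refuses anything older than TLS 1.2."""
    # create_default_context() turns on certificate and hostname verification.
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx

ctx = strict_tls_context()
print(ctx.minimum_version.name)
```

Pass a context like this to the HTTP client your integration uses so that a downgrade attempt fails instead of silently succeeding.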

6) Workspace governance and admin analytics

Governance is about ongoing operations. You should have the answers to the following questions:

- Who are the active users?

- Which features are used?

- Which teams are adopting?

- Where do usage spikes occur?

OpenAI provides a User Analytics dashboard for ChatGPT Enterprise and Edu. The help article describes usage and engagement reports and export options. See User Analytics for ChatGPT Enterprise and Edu. Analytics help adoption. They also help security, but they do not automatically tell you which external tools people still use.
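
If you export usage data for your own reporting, a small script can answer the "who is active" and "which teams are adopting" questions. This is a minimal sketch that assumes a CSV with the columns `user_id`, `team`, `day` and `messages`. Those column names and the sample rows are hypothetical, so map them to whatever your actual export contains.

```python
import csv
import io
from collections import defaultdict
from datetime import date

# Hypothetical export layout; real column names will differ.
SAMPLE_EXPORT = """user_id,team,day,messages
u1,Finance,2025-03-03,4
u2,Finance,2025-03-04,0
u3,Legal,2025-03-05,7
u1,Finance,2025-03-11,2
"""

def weekly_active_users(csv_text: str) -> dict[tuple[str, str], int]:
    """Count distinct users with at least one message, per (ISO week, team)."""
    active = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        if int(row["messages"]) == 0:
            continue  # an account that sent nothing is not counted as active
        year, week, _ = date.fromisoformat(row["day"]).isocalendar()
        active[(f"{year}-W{week:02d}", row["team"])].add(row["user_id"])
    return {key: len(users) for key, users in sorted(active.items())}

report = weekly_active_users(SAMPLE_EXPORT)
for (week, team), count in report.items():
    print(week, team, count)
```

Counting distinct users rather than rows keeps one heavy user from looking like team-wide adoption.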

Mini scorecard table

Use this mini scorecard to decide if you are ready to scale.

| Control area | What good looks like | What to verify |
|---|---|---|
| Access control | SSO required and roles defined | Role scope and admin limits |
| Retention | Admin set retention policy | Default retention and deletion rules |
| Residency | Region choices available | Contract language and subprocessors |
| Data use policy | Clear no training default for business data | Written commitments |
| Encryption | Strong encryption in transit and at rest | Vendor documentation |
| Admin analytics | Adoption reporting and exports | User analytics coverage |
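
One way to make the scorecard actionable is to record each row as a check and list the areas that are still open. The sketch below is a hedged illustration. The `ControlCheck` class, its `evidence` field and the sample entries are assumptions for the example, not part of any vendor tooling.

```python
from dataclasses import dataclass

@dataclass
class ControlCheck:
    area: str            # row name from the scorecard
    verified: bool       # has someone confirmed the "what to verify" item?
    evidence: str = ""   # hypothetical field: contract clause, doc name, ticket

def open_gaps(checks: list[ControlCheck]) -> list[str]:
    """Return the control areas that are unverified or have no evidence."""
    return [c.area for c in checks if not (c.verified and c.evidence.strip())]

checks = [
    ControlCheck("Access control", True, "SSO enforcement test"),
    ControlCheck("Retention", True, "Retention decision record"),
    ControlCheck("Residency", False),
    ControlCheck("Data use policy", True, "Signed agreement"),
    ControlCheck("Encryption", True, "Vendor documentation review"),
    ControlCheck("Admin analytics", True, ""),
]

gaps = open_gaps(checks)
ready_to_scale = not gaps
print(gaps, ready_to_scale)
```

Requiring evidence as well as a tick means a control only counts once someone can point to the document that proves it.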

Where shadow AI still happens after you buy Enterprise

Buying Enterprise reduces risk. It does not end shadow AI. Here is what still happens in real organizations.

Personal accounts stay convenient

People keep their personal accounts because they are already logged in. They also have saved history and custom bots. They may not know the difference or may not care.

Teams adopt tools faster than procurement

A team finds a niche tool. It solves a pain fast. They start using it in a week. Approval takes months. Shadow workflows appear.

Browser extensions are the silent path

Extensions can capture content. They can also forward text to third parties. Even if you approve ChatGPT Enterprise, users can still leak data through extensions.

People route around friction

If the approved tool blocks uploads or lacks a feature then people switch. This is not malicious. It is basic productivity behavior.

Implication: success is not only about vendor controls. It is about behavior change and proof.

The control layer and the visibility layer

OpenAI provides product level controls. That is the control layer. It includes retention options and admin analytics, as well as documented privacy commitments. See the OpenAI Enterprise privacy page. Most security teams still ask one question: are people actually using the governed workspace for real work?

That is a visibility question. You need signals across tools and teams.

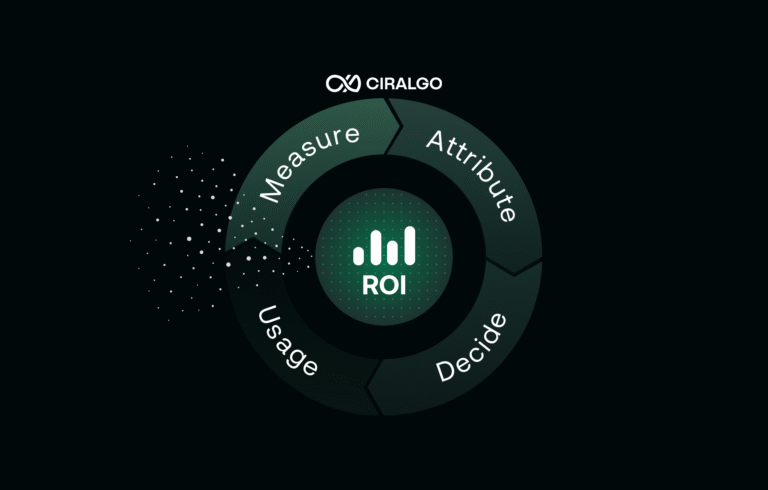

This is where Ciralgo fits. Ciralgo is the AI adoption analytics platform. It provides visibility across tools and teams, shows whether shadow AI is decreasing as ChatGPT Enterprise adoption increases and connects usage to time saved and cost signals.
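
To make "shadow AI is decreasing" concrete, you can track the share of observed AI sessions that run in the governed workspace each month. The sketch below is a generic illustration and not a description of how any product computes this. The function names and the sample counts are hypothetical, and it assumes you already have some source for ungoverned usage counts.

```python
def governed_share(months: list[tuple[str, int, int]]) -> dict[str, float]:
    """Share of observed AI sessions that ran in the governed workspace.

    Each item is (month, governed_sessions, ungoverned_sessions).
    """
    shares = {}
    for month, governed, ungoverned in months:
        total = governed + ungoverned
        shares[month] = round(governed / total, 2) if total else 0.0
    return shares

def shadow_use_is_falling(shares: dict[str, float]) -> bool:
    """True when the governed share rises in every consecutive month."""
    values = [shares[m] for m in sorted(shares)]
    return len(values) >= 2 and all(a < b for a, b in zip(values, values[1:]))

# Hypothetical monthly counts.
observed = [("2025-01", 120, 380), ("2025-02", 260, 340), ("2025-03", 450, 150)]
shares = governed_share(observed)
print(shares, shadow_use_is_falling(shares))
```

A share is more useful than a raw count here, because total AI usage usually grows during a rollout and raw shadow counts can rise even while behavior improves.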

If you want a governance program that sticks, pair controls with measurement. If you want a measurement story that finance trusts, connect adoption to outcomes. The "From pilot to profit" perspective helps here.

EU note on monitoring and GDPR

If you operate in the EU then monitoring can involve personal data. User IDs and prompts can be personal data in context. Your logging program must be transparent. It must also be proportionate. A practical approach is to define the purpose, minimise what you collect, set a retention period and communicate clearly to employees. For a plain starting point use the European Commission data protection page.

For your own posture, describe your approach to Security & Trust. Keep it enablement focused and avoid surveillance framing.
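
Minimisation can be shown in a few lines: keep only the fields adoption reporting needs, drop prompt text, and replace the user ID with a keyed hash. This is a sketch under stated assumptions. The event fields (`user_id`, `team`, `tool`, `timestamp`, `prompt`) and the function names are hypothetical. Pseudonymised data still counts as personal data under GDPR, so this reduces exposure rather than removing the obligation.

```python
import hashlib
import hmac

def pseudonymise(user_id: str, key: bytes) -> str:
    """Keyed hash of the user ID; without the key it cannot be recomputed."""
    digest = hmac.new(key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

def minimise(event: dict, key: bytes) -> dict:
    """Keep only the fields needed for adoption reporting; drop prompt text."""
    return {
        "user": pseudonymise(event["user_id"], key),
        "team": event["team"],
        "tool": event["tool"],
        "day": event["timestamp"][:10],  # date only, no time of day
    }

# Hypothetical event and key; store the real key in a secrets manager.
key = b"example-key-rotate-me"
event = {
    "user_id": "jane.doe@example.com",
    "team": "Finance",
    "tool": "ChatGPT Enterprise",
    "timestamp": "2025-03-03T09:41:00Z",
    "prompt": "Summarise the Q1 forecast",
}
slim = minimise(event, key)
print(sorted(slim))
```

Using a keyed hash instead of a plain hash means someone who knows an email address cannot simply recompute the pseudonym.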

Checklist

- Decide your approved use cases and your prohibited data types

- Enforce SSO and restrict admin roles

- Define workspace ownership and support model

- Set a retention policy that fits your incident response needs

- Document deletion expectations for users and admins

- Confirm data residency options and capture them in procurement docs

- Confirm enterprise privacy commitments and data use policy

- Validate encryption claims and key management needs

- Enable User Analytics and define who reviews it weekly

- Decide how you will export and retain adoption reports

- Create an Approved / Tolerated / Prohibited register in AI Adoption Hub

- Publish a short user policy that explains safe prompts and unsafe prompts

- Train your pilot group with real examples and role workflows

- Track shadow tool usage trends with your visibility layer

- Review friction points and remove them fast

- Reassess monthly since tools and behaviors change
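
The Approved / Tolerated / Prohibited register from the checklist can start as a very small data structure. The sketch below is illustrative only. The tool names, the `Status` enum and the `classify` helper are assumptions, and it does not represent the schema of any particular product.

```python
from enum import Enum
from typing import Optional

class Status(Enum):
    APPROVED = "approved"
    TOLERATED = "tolerated"
    PROHIBITED = "prohibited"

# Hypothetical entries; keys are lower-cased tool names.
REGISTER = {
    "chatgpt enterprise": Status.APPROVED,
    "example niche summariser": Status.TOLERATED,
    "personal chatgpt account": Status.PROHIBITED,
}

def classify(tool: str) -> Optional[Status]:
    """Look up a tool; None means it has not been reviewed yet."""
    return REGISTER.get(tool.strip().lower())

print(classify("ChatGPT Enterprise"), classify("Brand new tool"))
```

Returning `None` for unknown tools keeps "not yet reviewed" separate from "prohibited", which makes it easier to see where the review queue is the real source of friction.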

For a cross-tool framework, refer to the AI Tool Safety Scorecard (2026).

Procurement and security questions

Ask these questions in every vendor review.

- Can we enforce SSO for all users

- What roles exist and what can each role do

- What is the default retention and can we change it

- What happens when a user deletes a chat

- What residency options exist for our region

- Is customer content used for training by default

- What encryption is used in transit and at rest

- What admin analytics exist and can we export them

- How do we detect policy violations and investigate incidents

- How will we reduce personal account use and shadow tool use

If you also use the API then you need a separate review for data controls. OpenAI documents Zero Data Retention options for eligible customers. See Data controls in the OpenAI platform.

Closing

ChatGPT Enterprise can be a strong governed option. It still needs an admin baseline and it also needs a rollout that removes friction. If you want a faster path then centralise your tool register in AI Adoption Hub. Add measurement through the AI adoption analytics platform. Then you can show adoption and risk trends in one view.

If you want to map your current usage and find the biggest gaps, book a 20-min call.

Disclaimer: This article is for informational purposes only. It is not legal advice.